Quantum computing has long been hailed as the next frontier in technology, promising to solve problems that are currently beyond the reach of classical computers. After 17 years of relentless research, Microsoft believes it has achieved a groundbreaking milestone with the development of its Majorana 1 quantum processor. This innovation could pave the way for quantum computers to tackle industrial-scale challenges, from drug discovery to climate modeling.

In this article, we’ll explore Microsoft’s quantum computing breakthrough, the science behind the Majorana 1 processor, and its potential to transform industries. Whether you’re a tech enthusiast, a researcher, or a business leader, this deep dive will provide valuable insights into the future of computing.

What is Quantum Computing, and Why Does It Matter?

The Basics of Quantum Computing

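Unlike classical computers, which process information as bits that are either 0 or 1, quantum computers use qubits. Through superposition, a qubit can exist in a weighted combination of 0 and 1 at the same time. Through entanglement, the states of several qubits can become correlated in ways no classical system can reproduce. For certain problems, such as simulating molecules or factoring large numbers, algorithms that exploit these effects can in principle run dramatically faster than the best known classical methods.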
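To make superposition and entanglement concrete, here is a minimal sketch in plain Python. It tracks the four amplitudes of a two-qubit state and applies two standard gates: a Hadamard gate, which puts one qubit into superposition, and a CNOT gate, which entangles it with the second. The function names are illustrative and do not come from any particular quantum SDK. This brute-force approach is only practical for a handful of qubits.

```python
import math

# Two-qubit state vector: amplitudes for basis states |00>, |01>, |10>, |11>
# (index = 2*q0 + q1, so qubit 0 is the left-hand bit).

def apply_hadamard_q0(state):
    """Apply a Hadamard gate to qubit 0, putting it into superposition."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [
        s * (a00 + a10),
        s * (a01 + a11),
        s * (a00 - a10),
        s * (a01 - a11),
    ]

def apply_cnot(state):
    """CNOT with qubit 0 as control: flips qubit 1 when qubit 0 is 1."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def probabilities(state):
    """The measurement probability of each basis state is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]

state = [1.0, 0.0, 0.0, 0.0]      # start in |00>
state = apply_hadamard_q0(state)  # (|00> + |10>) / sqrt(2): superposition
state = apply_cnot(state)         # (|00> + |11>) / sqrt(2): entangled Bell state
print([round(p, 3) for p in probabilities(state)])  # prints [0.5, 0.0, 0.0, 0.5]
```

Measuring this Bell state gives 00 or 11 with equal probability and never 01 or 10. Neither qubit has a definite value on its own, yet their outcomes always agree.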

The Challenge with Qubits

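Despite their potential, qubits are notoriously fragile. They are highly sensitive to environmental noise such as stray heat and electromagnetic interference. This noise can cause them to lose their quantum state, a process called decoherence, and introduce errors into a calculation. For years, tech giants like IBM, Google, and Microsoft have been working to make qubits more stable and reliable.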
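The simplest error-correcting scheme, a three-copy repetition code, shows why this fragility is costly. The sketch below assumes a deliberately simple noise model in which each copy flips independently with probability p. Real quantum error correction, such as surface codes, is more involved because it must also handle phase errors. This sketch only illustrates the basic trade of extra physical hardware for fewer logical errors.

```python
import random

def logical_error_rate(p: float) -> float:
    """Exact failure probability of a 3-copy repetition code with majority vote.

    Decoding fails when 2 or 3 of the 3 copies flip:
    3 * p^2 * (1 - p) + p^3, which simplifies to 3p^2 - 2p^3.
    """
    return 3 * p**2 * (1 - p) + p**3

def simulate(p: float, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity, flipping each copy independently."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # majority vote now returns the wrong bit
            failures += 1
    return failures / trials

for p in (0.1, 0.01):
    print(f"p={p}: unprotected {p}, encoded {logical_error_rate(p):.6f}, "
          f"simulated {simulate(p, 200_000):.6f}")
```

At p = 0.01 the encoded error rate falls to about 0.0003. However, this costs three times the hardware and only helps while p stays below a threshold, which is 0.5 for this toy code. Physical qubits that are less noisy to begin with need less of this overhead.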

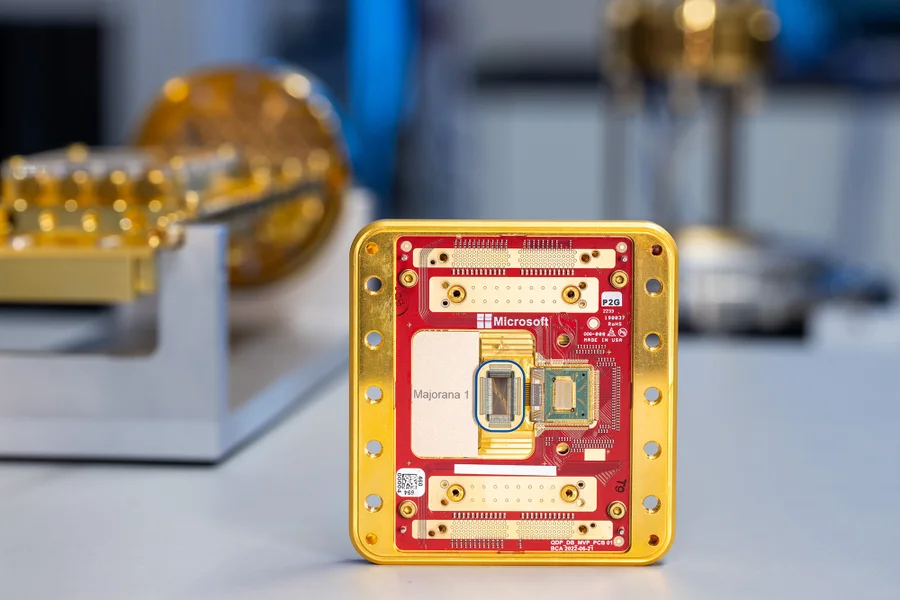

Microsoft’s Majorana 1 Processor: A Game-Changer

The Majorana Particle: A Theoretical Marvel

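At the heart of Microsoft’s breakthrough is the Majorana fermion, a particle first proposed by physicist Ettore Majorana in 1937 that is its own antiparticle. Microsoft’s chip does not use free-floating Majorana particles. Instead, it relies on Majorana zero modes, quasiparticle states that can emerge at the ends of specially engineered superconducting nanowires. A qubit’s information is shared non-locally across a pair of these modes, so a disturbance at any single location has difficulty corrupting it. This is the source of their hoped-for stability.

Microsoft says its researchers have used these modes to create topological qubits, a new type of qubit designed to be more robust and less prone to errors.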
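Topological protection rests on storing information non-locally. Here is a loose classical analogy, offered only as an illustration and not as a model of Majorana physics. A bit is stored as the parity (XOR) of two bits kept in different places. Whatever the stored value, each bit on its own is equally likely to be 0 or 1, so inspecting a single location reveals nothing. In the quantum setting, local noise that cannot learn the encoded state also has a hard time disturbing it. The helper names below are hypothetical.

```python
from collections import Counter

def encode(bit: int, r: int) -> tuple[int, int]:
    """Split `bit` into two shares whose XOR (parity) equals the bit.

    `r` is a random mask; share_a lives in one place, share_b in another.
    """
    return r, r ^ bit

def decode(share_a: int, share_b: int) -> int:
    """Recover the bit only by reading both shares together (a joint parity check)."""
    return share_a ^ share_b

def local_view(bit: int, which: int) -> Counter:
    """Distribution of a single share over both equally likely masks."""
    return Counter(encode(bit, r)[which] for r in (0, 1))

for bit in (0, 1):
    # Each share, viewed alone, has the same distribution for bit=0 and bit=1.
    print(bit, local_view(bit, 0), local_view(bit, 1))
```

Only a joint check of both shares recovers the value. The analogy stops there: a classical XOR scheme does not capture how real quantum noise acts on Majorana zero modes.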

The World’s First Topoconductor

To achieve this, Microsoft developed a topological superconductor, or topoconductor, a new material stack that combines the semiconductor indium arsenide with the superconductor aluminum. According to Microsoft, this material allows researchers to observe and control Majorana zero modes, enabling the creation of more reliable qubits.

The Majorana 1 processor, which fits in the palm of your hand, currently houses eight topological qubits. However, Microsoft envisions scaling this up to 1 million qubits on a single chip, a feat that could revolutionize computing as we know it.

The Science Behind the Breakthrough

A New Architecture for Quantum Computing

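Microsoft’s approach differs fundamentally from the superconducting-circuit qubits used by companies such as IBM and Google. Those qubits store information in local physical states that noise can easily disturb, so building a useful machine requires a large amount of error correction. Majorana 1 instead encodes each qubit in the parity of its Majorana zero modes, that is, whether a nanowire holds an even or odd number of electrons. This design is intended to make qubits less error-prone at the hardware level. If it works, it would reduce the burden of error correction, one of the biggest challenges in quantum computing.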

Peer-Reviewed Validation

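Microsoft’s research has been published in the peer-reviewed journal Nature. The paper reports single-shot measurements of electron parity in the company’s indium arsenide and aluminum devices, a key building block for reading out topological qubits. Peer review is an important check on these claims. However, the journal noted that the published results do not, on their own, prove the presence of Majorana zero modes in the devices.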

The Potential Impact of Microsoft’s Quantum Breakthrough

Solving Real-World Problems

A quantum computer with 1 million qubits could tackle problems that are intractable for today’s classical computers. Here are just a few examples:

- Drug Discovery: Simulating molecular interactions to accelerate the development of new medicines.

- Climate Modeling: Creating more accurate models to predict and mitigate the effects of climate change.

- Material Science: Designing new materials with unprecedented properties, such as superconductors that work at room temperature.
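These simulations overwhelm classical machines partly because an exact classical simulation of n qubits must store 2^n complex amplitudes. The sketch below assumes 16 bytes per amplitude (two 64-bit floats), a common choice for state-vector simulators. The function names are illustrative.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for an exact state vector: 2**n amplitudes, each a complex number."""
    return (2 ** n_qubits) * bytes_per_amplitude

def human(nbytes: int) -> str:
    """Format a byte count using binary units (KiB, MiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(nbytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024

for n in (8, 30, 50):
    print(n, human(state_vector_bytes(n)))
# prints:
# 8 4 KiB
# 30 16 GiB
# 50 16 PiB
```

Each additional qubit doubles the requirement. By around 50 qubits, exact simulation is already at or beyond the memory of the largest supercomputers. Specialized classical techniques can sometimes do better for particular circuits, so treat this as an intuition rather than a hard limit.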

A Gateway to Scientific Discovery

According to Chetan Nayak, Microsoft Technical Fellow, “A million-qubit quantum computer isn’t just a milestone—it’s a gateway to solving some of the world’s most difficult problems.”

Microsoft’s Quantum Journey: 17 Years in the Making

A Long-Term Commitment

Microsoft’s quantum computing program is the company’s longest-running research initiative, spanning 17 years. Zulfi Alam, Corporate Vice President of Quantum at Microsoft, describes the Majorana 1 processor as a “fundamental redefinition” of the quantum computing landscape.

Collaboration with DARPA

Microsoft’s breakthrough has earned it a spot in the final phase of the Underexplored Systems for Utility-Scale Quantum Computing (US2QC) program, run by the Defense Advanced Research Projects Agency (DARPA). The collaboration is intended to accelerate the development of a fault-tolerant quantum computer based on topological qubits.

What This Means for the Future of Computing

A Scalable Quantum Architecture

Microsoft says its topological qubits are designed to be not only more reliable but also scalable. The architecture is intended to grow to many more qubits on a single chip without compromising performance. That scaling has yet to be demonstrated beyond the current eight-qubit processor.

A Competitive Edge in the Quantum Race

While companies like IBM and Google have made significant strides in quantum computing, Microsoft’s focus on topological qubits could give it a distinctive advantage if the approach scales as planned. By targeting error correction and scalability at the hardware level, Microsoft is positioning itself as a leader in the quantum computing race.

Expert Insights: What Industry Leaders Are Saying

We reached out to Dr. Sarah Thompson, a quantum computing researcher at MIT, for her perspective on Microsoft’s breakthrough.

“Microsoft’s development of topological qubits is a significant step forward in the quest for practical quantum computing. If they can scale this technology to a million qubits, it could unlock capabilities that were previously unimaginable. This is a game-changer for industries ranging from healthcare to energy.”

Challenges and Opportunities Ahead

Overcoming Technical Hurdles

While Microsoft’s breakthrough is impressive, there are still challenges to overcome. Scaling up to 1 million qubits will require significant advancements in materials science and engineering.

Ethical and Security Considerations

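Quantum computing also raises important ethical and security questions. For example, a large, fault-tolerant quantum computer running Shor’s algorithm could break widely used public-key encryption methods such as RSA and elliptic-curve cryptography. For this reason, researchers and standards bodies are developing post-quantum cryptographic techniques.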
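The threat comes from Shor’s algorithm, which reduces factoring an integer N to finding the period r of f(x) = a^x mod N. A quantum computer would perform the period finding. The sketch below brute-forces that step classically, which is feasible only for tiny N and is exactly why RSA is safe today. It then applies the classical post-processing that turns r into factors. The function names are illustrative.

```python
from math import gcd

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (brute force; the step a quantum computer speeds up).

    Assumes gcd(a, n) == 1, otherwise no such r exists.
    """
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical_part(n: int, a: int):
    """Turn the period of a^x mod n into nontrivial factors of n, or None if this `a` fails."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared  # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # a^(r/2) = -1 mod n yields only trivial factors: retry
    return gcd(half - 1, n), gcd(half + 1, n)

print(shor_classical_part(15, 7))  # prints (3, 5)
```

For a 2048-bit RSA modulus, brute-force period finding is hopeless. Practical quantum attacks are expected to require a large fault-tolerant machine, which is why progress in qubit quality matters for long-term security planning.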

Conclusion: A New Era of Computing

Microsoft’s Majorana 1 processor represents a pivotal moment in the evolution of quantum computing. By harnessing the power of Majorana particles and topological qubits, Microsoft has laid the foundation for a new era of computing that could transform industries and solve some of humanity’s most pressing challenges.

As the company continues to refine its technology and collaborate with organizations like DARPA, the dream of a scalable, fault-tolerant quantum computer is closer than ever. The future of computing is quantum—and Microsoft is leading the charge.

Key Takeaways

- Microsoft’s Majorana 1 processor uses topological qubits based on Majorana zero modes, an approach designed to offer greater stability and scalability.

- The company has developed a topological superconductor, a new material that enables the control of Majorana particles.

- A quantum computer with 1 million qubits could revolutionize industries like healthcare, climate science, and material science.

- Microsoft’s research has been published in a peer-reviewed paper in Nature.

- The company is collaborating with DARPA to accelerate the development of fault-tolerant quantum computers.

By pushing the boundaries of quantum computing, Microsoft is not only advancing technology but also creating opportunities to address some of the world’s most complex problems.